Suppose we propose an experiment to estimate the effect of a treatment X on an outcome Y, where Y is a binary (0 or 1) outcome. Call that effect b. If the base rate of Y is p, then b is bounded between -p and 1-p.

Our decision problem is whether to adopt the treatment. If we adopt, the reward is b. If not, the reward is 0.

Suppose that running the experiment reveals b, so that we can make the optimal choice of adopting the treatment exactly when b>0. Learning b thus guarantees a payoff of max(0,b).

Suppose we collect priors on the value of b, giving rise to a prior distribution F(b). Does this prior distribution tell us about the value of running the proposed experiment to estimate b?

For example, it could be that F(b) is a spike distribution at b=0, implying ex ante consensus that X has no effect on Y. Suppose we act on the prior and do not adopt. If the prior is exactly right, we obtain the optimal payoff of 0. If the prior was overconfident and b is actually negative, then again we secure the optimal payoff of 0. But if the prior was unduly pessimistic, acting on it could miss out on some gain b>0. In the absence of any other information, this gain could be as large as 1-p. The maximum potential regret for not having run the experiment is therefore 1-p. (Regret in this worst case is the optimal payoff 1-p minus the realized payoff 0.)
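One way to write this down, as a sketch: let a be an adoption indicator (notation I am introducing here, with a=1 for adopt and a=0 for not), so that the realized payoff is a·b and the payoff from knowing b is max(0,b). Regret at a given true effect b is then

$$
R(a, b) = \max(0, b) - a\,b, \qquad a \in \{0, 1\}, \quad b \in [-p,\ 1-p].
$$

For the spike prior we choose a=0, and the worst case over the admissible range of b is

$$
\max_{b \in [-p,\ 1-p]} R(0, b) = \max_{b \in [-p,\ 1-p]} \max(0, b) = 1 - p.
$$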

Now consider another treatment with potential effect c on the same outcome, for which the base rate is still p. As before, if we ran the experiment for this treatment we could secure the optimal payoff of max(0,c). Suppose, however, that for this second treatment the priors we obtain give rise to a distribution G(c) that is uniform over the interval from 0 to 1-p. The prior expectation is (1-p)/2 > 0. If we acted on the basis of our prior, we would adopt the treatment, securing payoff c. If c>0, we are better off for having done so. If c<0, we would have made a mistake in acting on the prior and could do as badly as -p. The maximum potential regret for not having run the experiment is p. (Regret in this worst case is the optimal payoff 0 minus the realized payoff -p.)

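Under all of the assumptions above, including the different priors, the comparative value of experimenting with the first treatment versus the second depends on p. The maximum potential regret is 1-p for the first treatment and p for the second, so by this measure the experiment on the first treatment is the more valuable one when p<1/2, and the experiment on the second is the more valuable one when p>1/2.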
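To make the comparison concrete, here is a minimal sketch in Python. The function name `worst_case_regret` and the argument `prior_mean` are mine, for illustration only. The sketch assumes, as in the two examples, that we adopt exactly when the prior mean is positive and that the true effect can lie anywhere between -p and 1-p.

```python
def worst_case_regret(prior_mean: float, p: float) -> float:
    """Maximum regret from acting on the prior instead of learning the effect.

    Assumptions (illustrative): the true effect lies in [-p, 1 - p], and we
    adopt the treatment exactly when the prior mean is positive.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("base rate p must lie in [0, 1]")
    if prior_mean > 0:
        # We adopt; the worst case is an effect of -p, where the optimum was 0.
        return p
    # We do not adopt; the worst case is a forgone effect of 1 - p.
    return 1.0 - p


for p in (0.2, 0.5, 0.8):
    # First treatment: spike prior at 0, so the prior mean is 0.
    first = worst_case_regret(prior_mean=0.0, p=p)
    # Second treatment: uniform prior on [0, 1 - p], so the mean is (1 - p) / 2.
    second = worst_case_regret(prior_mean=(1.0 - p) / 2, p=p)
    print(f"p={p}: first treatment {first:.2f}, second treatment {second:.2f}")
```

With a base rate of 0.2, the first treatment carries the larger worst-case regret (0.8 versus 0.2). The ordering flips at a base rate of 0.8, and the two are tied at 0.5.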

These thoughts came to mind when listening to presentations by Brian Nosek and by Stefano Dellavigna at the BITSS conference this past week. Nosek presented a pilot project called the Lifecycle Journal, which proposes to use various existing specialized research evaluation services to rate the quality of different facets of a study. You can obtain quality ratings for statistical power, fidelity to a pre-analysis plan, and other features. He raised the intriguing possibility of obtaining a quality rating for the experiments that you are proposing to run by eliciting priors about potential effects using the Social Science Prediction Platform (SSPP). The set of quality ratings could be compiled from these separate services and then serve to establish the credibility of a study in a way that sidesteps the need for peer review.

Later at the meeting Dellavigna presented some results from the data that SSPP has collected to date on both priors and experimental outcomes. I asked Dellavigna whether they had considered using the priors to construct ex ante metrics of the value of experiments, and he replied that they had thought about it but they hadn’t yet settled on a decision framework for doing so.

These ideas and the analysis above raise the intriguing possibility of designing a methodology for quantitatively measuring the ex ante (that is, prior to knowing any results) value of a proposed experiment. The inputs could be crowdsourced or expert-determined information about payoffs (the treatment adoption payoffs), base rate information from existing data, and crowdsourced or expert-provided priors about effect sizes (like what SSPP already collects). This could put ratings about the quality of experiments on firmer ground, more like ratings about statistical power than the taste-based judgments that you get from peer reviewers’ subjective assessments.

PS Sandy Gordon and I also presented at BITSS on a new tool called Data-NoMAD that we developed (with Patrick Su) to allow researchers to create a “digital fingerprint” of their data to allow third parties to authenticate that the data have not been manipulated. Working paper here at arXiv.

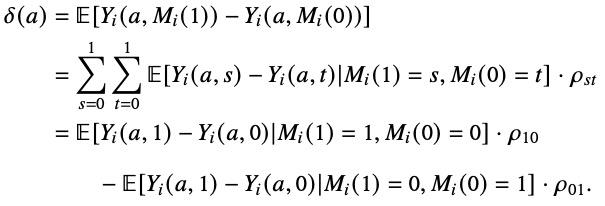

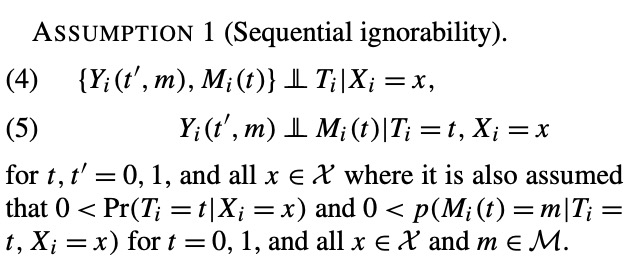

Personally, I had not given much thought to how the second part of the assumption (expression 5), by imposing restrictions across outcome and mediator potential outcomes, implied effect homogeneity across types defined in terms of how the treatment affects mediator values. If we consider the analogy to instrumental variables, this would be like restricting causal effects to be homogeneous across compliers and defiers.

So we are not quite there with respect to our target graph. That said, the graph that results here is interesting, because it does capture a DGP that characterizes two conditionally independent effects of X1 and X2 on Y, and thus it does capture the effects of a joint intervention of X1 and X2 on Y in circumstances in which Z2 is held fixed. It’s just that the mediation pathway between X1 and Y is obscured relative to our target graph.

Here, we have a DGP that is clean for the effect of X1 on Y, but the effect of X2 on Y is confounded by a backdoor path. The variable U is exogenous, and so we can remove it from the graph. This gets us to our target intervention graph, and represents a solution to the problem.

Writing out “Z1=z1, Z2=z2” by the graph makes it clear that these are conditional relationships on the actual DGP, and that marginalization (with respect to z1 and z2) would be needed to get from this to the effects that the target intervention graph represents in the population.